In a time when everyone is adding AI to existing products, I have taken steps to reduce the use of generative AI in Push Training. Specifically, I have decided to reduce the app's reliance on OpenAI's text-generation API to produce dynamic coaching snippets for users on demand.

Background

Push Training is an app that delivers hyper-personalized workouts to the user, including adaptable workout structures, music, and audio coaching. Unlike other apps that use coaches who speak in general terms to all users, Push Training can provide audio coaching specific to each user at any given point in time.

General guidance provided in most workout programs:

"Let's start running between 6.0 and 8.0. If this is too fast for you, adjust to what feels like 75% effort."

Customized audio delivered to Push Training users:

"Up next, we have a speed of 7.7 and no incline. Let's go!"

Providing audio coaching specific to each user creates a significant execution challenge. Before generative AI, this would have required a person to sit in a studio and record every combination of speed and incline, along with variants for each additional piece of context that might be included, such as:

"Get ready to push yourself even further! Next, we will be training at a speed of 7.7 and no incline, which is faster than your Personal Best!"

"Get ready to rock out to the song Bump, by Cushy at a speed of 7.7 and incline of 2."

With 170 supported speeds, 10 supported inclines, and 100 songs or other context clues that may be included, there are at least 170,000 (170 × 10 × 100) unique combinations of information that may be communicated to the user in any given coaching snippet.

The only way this can be accomplished in a reasonable amount of time is to leverage AI to 1) produce text snippets and 2) produce the audio output using life-like text-to-speech. This was the initial approach taken with Push Training, as documented here. While this enabled the app to get up and running quickly, additional challenges soon arose.

Common Challenges Relying on External APIs

A number of challenges arise when introducing complexity into your system, especially hard dependencies on external services. In the case of Push Training, the system used OpenAI's text generation APIs to produce coaching text snippets on demand, which were then fed through a text-to-speech pipeline, ultimately delivering the hyper-customized audio coaching to the client in a matter of seconds. The challenges for this type of external hard dependency are typically:

Increased cost. External APIs are rarely free, or if they are free, there are usually other constraints that you must then account for, such as strict rate limiting.

Increased latency. Requiring additional network requests to complete a task will almost always increase end-to-end latency, and the time spent waiting on those requests can also add to the cost of running the internal service.

Reduced visibility and control. External APIs can reduce a highly complex system to a simple API, allowing the API consumer to only care about the inputs and outputs. While this can be beneficial, it also means the API consumer has limited control over what exactly happens when the API is called.

Increased chance of downtime or errors. Each hard dependency introduces a liability. If an external API encounters downtime or produces errors, so will the internal service that depends on it. This problem compounds with each hard dependency. For example, if the internal API relies on 5 external APIs, each with a 99% success rate, and their failures are independent, the combined success rate is 0.99^5, or about 95%.
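The compounding effect is easy to check in code. The following is a minimal Python sketch, not code from Push Training; the `compound_success_rate` helper is hypothetical, and it assumes each dependency fails independently of the others.

```python
import math


def compound_success_rate(rates: list[float]) -> float:
    """Chance that every dependency succeeds, assuming independent failures."""
    return math.prod(rates)


# An internal API with five hard dependencies, each succeeding 99% of the time.
overall = compound_success_rate([0.99] * 5)
print(f"{overall:.1%}")  # 95.1%
```

Every additional dependency multiplies in another factor below 1, so the overall rate can only go down as dependencies are added.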

New Challenges Relying on Generative AI

While deterministic API outputs are always "correct," generative AI outputs are more subjective. A generative AI API may have an uptime of 99.999%, meaning the server executed the request and returned a valid response 99.999% of the time, but the contents of the response include some randomness and may not contain precisely what was expected.

The randomness and "mind of its own" are what make generative AI so powerful. The primary input for generative AI is a prompt, which describes what the user is asking the model to produce. You can give it the same prompt 100 times and get back a slightly different answer each time, as if you were asking 100 different people the same question. To extend the analogy, some people are lazy and may not fully answer the question, while others may decide that part of your question isn't relevant to the answer. The same holds true for generative AI, at least in its current state.

Generative AI in Push Training

I started auditing the coaching text outputs delivered to users by reviewing the prompt/context provided to the text generation API and grading the text output on a scale ranging from Awful to Excellent. Over multiple months and after reviewing over 10,000 outputs, what I found was disappointing. Only 80% of coaching snippets met all the requirements outlined in the prompt. While the remaining 20% of the outputs weren't always incorrect, they often did not include all of the context asked for in the prompt.

Excerpt from the general prompt for all generated coaching snippets:

You are the coach for a interval training app for treadmill runs. You guide users through the workouts as they alternate between train and recover intervals. You must inform the user of upcoming incline and speed. You can only reply with a maximum of 50 words. Never count down...

Examples of prompt inputs and outputs, where the outputs are not incorrect, but are not considered passing:

...You must incorporate that the user has completed 6 intervals.

"Next up, you've completed 6 intervals so far. Get ready for an incline of 5 and a speed of 6.0. You've got this!"

Despite including all requested information, this text failed to pass review because it seems odd that a coach would say, "Up next, you've completed 6 intervals so far."

...You must inform the user the next interval is at their Personal Best speed.

"The next training interval is at your personal best speed. You're doing great, keep it up!"

The text is not incorrect, but it failed to include the upcoming incline and speed.

Examples of incorrect outputs:

"In 10 seconds, get ready for an incline of 5 and a speed of 8.0. 3, 2, 1, go!"

Output included "10 seconds" and a countdown after explicitly being told not to do so.

"You'll be running at a speed of 8.0 and an incline of 0 with the song <SONG> by <ARTIST>."

Output included placeholder tokens, apparently expecting them to be replaced later.

"Next up, get ready for an incline of 1 and a speed of 6.8. And don't forget to include the name of the workout!"

Output included part of the prompt rather than following the prompt instructions.

[ADDITION 24-04-11]: It appears Humane’s AI Pin also runs into the same issue of including the prompt in the response.

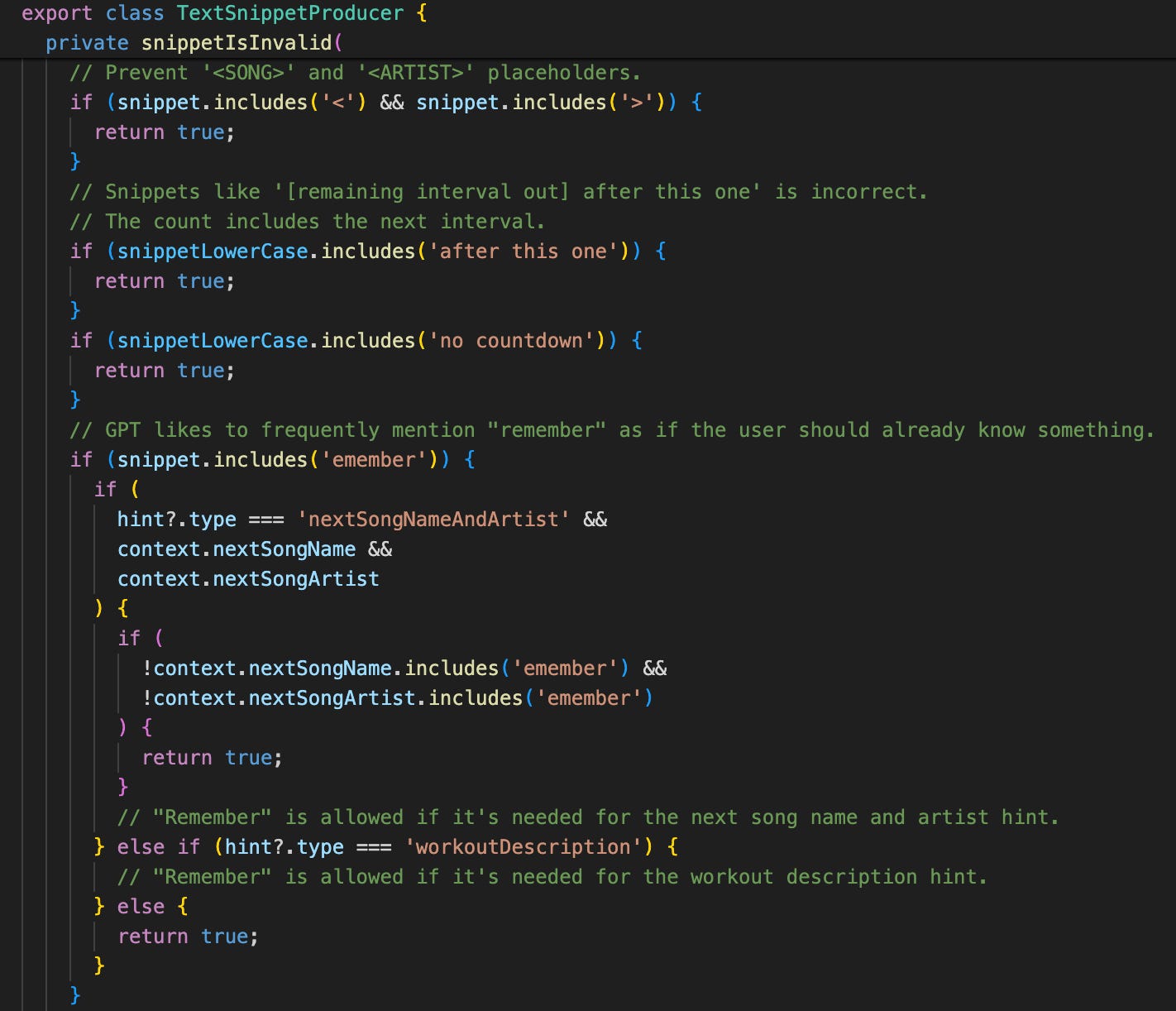

As I reviewed the text outputs, I modified the prompts and added code that checked for invalid outputs, but this quickly became a game of whack-a-mole. My code around the inputs/outputs continued to grow, along with retry logic and fallback outputs for when all else failed. One function grew to over 100 lines of if statements that checked for prohibited substrings.
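For illustration, a validation-and-retry wrapper of the kind described above might look like the following minimal Python sketch. It is not the code used in Push Training: the `PROHIBITED_SUBSTRINGS` list, the phrasing the validator expects (`incline of N` or `no incline`), and all function names are hypothetical, and `generate` stands in for whatever function calls the text-generation API. The checks are drawn from the failure cases above and the 50-word limit in the prompt.

```python
import re
from typing import Callable

# Hypothetical examples of phrases that signal a bad output (countdowns,
# placeholder tokens, leaked prompt text); not Push Training's actual list.
PROHIBITED_SUBSTRINGS = ("seconds", "3, 2, 1", "<", ">", "don't forget to include")
MAX_WORDS = 50  # Mirrors the word limit stated in the prompt.


def mentions_incline(lowered: str, incline: int) -> bool:
    """Accept 'no incline' for 0, otherwise require 'incline of N' as a whole number."""
    if incline == 0 and "no incline" in lowered:
        return True
    return re.search(rf"incline of {incline}\b", lowered) is not None


def is_valid_snippet(text: str, speed: float, incline: int) -> bool:
    lowered = text.lower()
    if any(bad in lowered for bad in PROHIBITED_SUBSTRINGS):
        return False
    if len(text.split()) > MAX_WORDS:
        return False
    # The prompt requires the upcoming speed and incline to be stated.
    return f"{speed:.1f}" in text and mentions_incline(lowered, incline)


def coaching_text(
    generate: Callable[[], str], speed: float, incline: int, retries: int = 3
) -> str:
    """Call `generate` up to `retries` times; fall back to a fixed template."""
    for _ in range(retries):
        text = generate()
        if is_valid_snippet(text, speed, incline):
            return text
    incline_text = "no incline" if incline == 0 else f"an incline of {incline}"
    return f"Up next, a speed of {speed:.1f} and {incline_text}. Let's go!"


# Simulated API responses: the first fails validation, the second passes.
attempts = iter([
    "In 10 seconds, get ready for an incline of 5 and a speed of 8.0. 3, 2, 1, go!",
    "Next up, get ready for an incline of 5 and a speed of 8.0. You've got this!",
])
print(coaching_text(lambda: next(attempts), speed=8.0, incline=5))
```

Each new failure mode means another entry in the list or another branch in the validator, which is how this kind of function keeps growing. A check like this also cannot catch outputs that are technically complete but awkward, such as the "you've completed 6 intervals so far" example, which still needed human review.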

Each time I patched the prompt, changed the provided context, or updated the validation and retry logic, I would feel the end was in sight, only to see new issues appear, often caused by changes in the underlying model I was relying on. OpenAI is constantly making changes to improve their models, as they should, but each change can largely invalidate the previous work done to get the prompts in just the right spot.

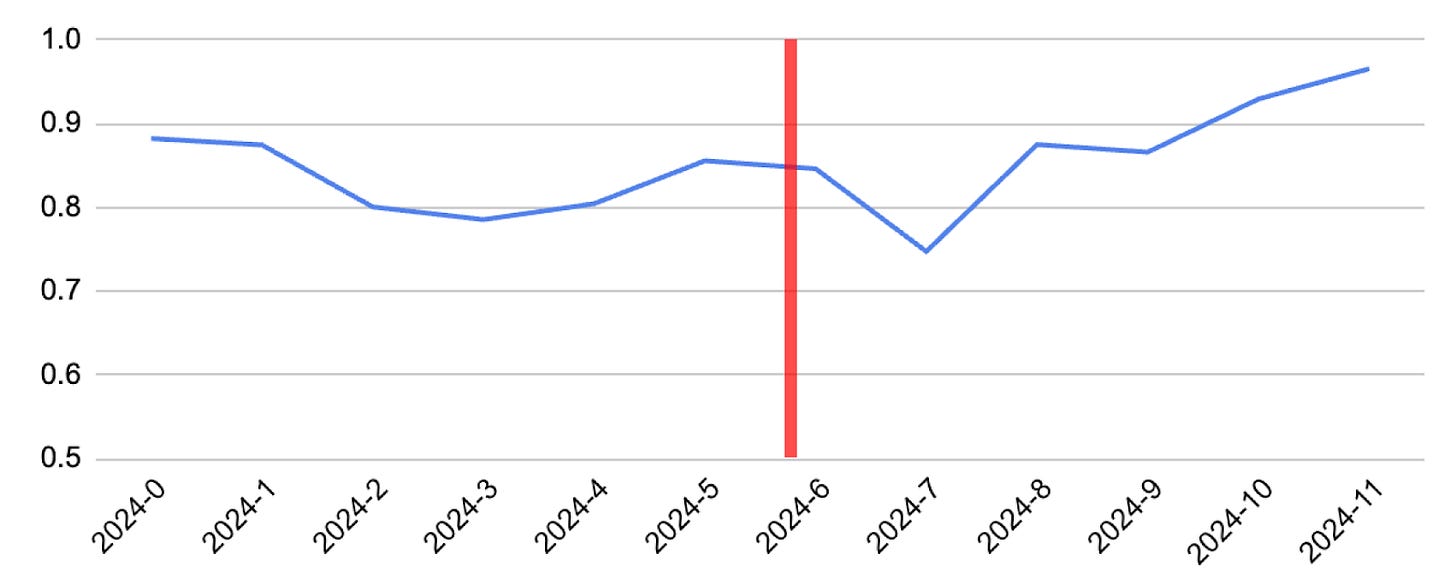

The image above illustrates the pass rate of text outputs over time, and the red line indicates an underlying model change. In this example, the 85% pass rate suddenly dropped to 75% simply because the underlying model was updated.

The Problem Compounds

Text generation is just part of the process when delivering personalized audio coaching to the user, and success rates compound. Along with reviewing the text output, I reviewed the generated audio output provided by a different service. The text-to-speech service has a number of reliability issues of its own, causing its pass rate to be relatively low. If we assume each of these two services has a pass rate of 85%, the success rate of a single audio snippet is 0.85 × 0.85, or about 72%. With ~15 generated snippets per user workout, the chance that an entire workout is error-free is about 0.72^15, or roughly 0.7%.
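Written out, assuming the two services fail independently of each other and from snippet to snippet:

$$
P_{\text{snippet}} = 0.85 \times 0.85 = 0.7225 \approx 0.72
$$

$$
P_{\text{workout}} = P_{\text{snippet}}^{15} \approx 0.72^{15} \approx 0.0072
$$

Carrying the unrounded 0.7225 through gives $0.85^{30} \approx 0.0076$, so either way fewer than 1 in 100 workouts would be delivered without a single failed snippet.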

The prompt adjustments and whack-a-mole strategy weren't cutting it.

My Solution: Reduce the Complexity

Those in the world of AI may be quick to point out several opportunities for improvement in my initial approach, but optimizing generative AI inputs and outputs can be a rabbit hole that I could not afford to go down. As an independent app developer, my job primarily involves putting out the most significant fires and looking for the lowest-hanging fruit with the biggest payoff. Coach text generation is just a small part of the bigger picture, but it was the biggest fire at the time.

After spending hours a week reviewing generated text and audio outputs, I started to identify common patterns in the passing outputs. That raised a question: why was I relying on generative AI if I only liked 20 to 30 outputs for any given input?

The simplest solution to put out this fire was to reduce the hard dependency on the generative AI API.

I identified the prompts that failed most often and gradually replaced those calls to OpenAI's text generation API with if statements and string interpolation. The logic now builds each response from separate strings, combining an opener, the speed and incline, any extra context, and a closing word of encouragement. Different inputs get combined in different ways, with a small amount of randomness to keep the coaching from sounding too repetitive.

This approach isn't exciting, but it has increased the text pass rate from 75% to 95%. It reduced the complexity of the system, provided a better end-user experience at a lower cost, and freed up my time to look for the next biggest opportunity. I'm still using generated text for coaching, but in a reduced capacity.
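A minimal Python sketch of this kind of string builder follows. The phrase banks, function names, and sentence structure are hypothetical illustrations of the opener, speed/incline, context, and closer pattern, not the strings Push Training actually uses. Passing a seeded `random.Random` makes the output reproducible for testing.

```python
import random
from typing import Optional

# Hypothetical phrase banks; each opener is written to lead into "a speed of ...".
OPENERS = ("Up next, we have", "Next up, get ready for", "Coming up, we'll run at")
CLOSERS = ("Let's go!", "You've got this!", "Keep pushing!")


def describe_interval(speed: float, incline: int) -> str:
    """Always state the speed and incline, which the coaching must never omit."""
    incline_text = "no incline" if incline == 0 else f"an incline of {incline}"
    return f"a speed of {speed:.1f} and {incline_text}."


def build_coaching_text(
    speed: float,
    incline: int,
    context: Optional[str] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Combine opener + speed/incline + optional context + closer."""
    rng = rng if rng is not None else random.Random()
    parts = [rng.choice(OPENERS), describe_interval(speed, incline)]
    if context:
        parts.append(context)
    parts.append(rng.choice(CLOSERS))
    return " ".join(parts)


print(build_coaching_text(7.7, 0, context="That's faster than your Personal Best!"))
```

Because every sentence is assembled from known-good pieces, the speed and incline are always present, and as long as the phrase banks themselves are clean, the output cannot contain a countdown, a placeholder token, or leaked prompt text.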

In the pass-rate chart above, the green line marks reaching a 95% pass rate as I've been gradually making these changes over the past few weeks. More work is needed, but a 100% pass rate is now within reach.

Conclusion

In software, it's rare to find an opportunity that reduces complexity while creating a better user experience at a lower cost. Usually, one is gained at the cost of another. As generative AI continues to advance, I will definitely revisit using it, with richer inputs, to power all coaching text. The future is still bright for what generative AI can deliver to the user, and it's still being used for all text-to-speech, but for now, I can rest easy knowing my boring solution is consistently providing helpful coaching to the user.

Download Push Training to hear the coaching for yourself and see if you can identify which coaching statements are generated by AI and which ones are from my string builder.

Lastly, I’d love to hear what you think. Find me on LinkedIn if you see an opportunity that I’ve missed or have an idea of how the system could be further improved.